Abstract: A multimedia surveillance system must transmit a large amount of data. Because the storage space of the proxy server is limited, the performance of the CDN degrades. To address this, the framework is designed in the semi-synchronous/semi-asynchronous mode. Combined with task pool and thread pool techniques, the transmission subsystem between the media resource server and the origin server in a P2P-based CDN streaming media system is designed and implemented. The paper proposes an effective algorithm for dynamically managing the thread pool and the task pool, which combines idle information from the task pool with statistics on system run-time parameters. The algorithm uses these operating parameters to estimate the current load and pressure on the system, and it adjusts the sizes of the task pool and the thread pool accordingly. Experiments against two traditional implementations compare the approaches. With the new algorithm, the CPU load is significantly reduced, and both system efficiency and network throughput are improved.

1 Introduction

Peer-to-peer (P2P) technology is a hot topic in international computer networking research. Its prototypes appeared in the 1970s, with UseNet and FidoNet as typical representatives. A Content Distribution Network (CDN), by contrast, publishes the content or media of a website to the network "edge" closest to the user. When a user accesses the site, the system automatically and transparently redirects the user to an edge server. This reduces the load on the central server and the backbone network, and it improves the performance of the streaming media service or website.

With the rapid development of network technology, streaming media content is widely spread over the Internet, and the demand for high-quality streaming media distribution services is increasingly evident. Providing fast, high-quality streaming media distribution to a large number of users has therefore become a hot and difficult research topic.

A multimedia surveillance system must transmit a large amount of data. This mainly includes control information, feedback information, video, audio, and other data such as text. Traditional multimedia monitoring systems are based on the C/S or B/S mode. In such systems, transmitting these massive streaming media data between the monitoring points and the monitoring center causes server performance to drop sharply. This paper therefore introduces P2P technology into the design of the multimedia monitoring system, with the following main improvements:

(1) A monitoring transmission subsystem based on P2P and CDN is designed.

(2) Clients obtain service from the edge servers in P2P mode, and content is also distributed between the origin server and the edge servers in P2P mode. This makes effective use of the network bandwidth and host resources in the system. It also relieves the pressure on the origin server and the edge servers, reduces data traffic on the backbone network, lowers the operator's cost, and improves the quality of service for clients.

(3) To alleviate the mismatch between network I/O and disk I/O, the transmission subsystem adopts the semi-synchronous/semi-asynchronous method. This method separates network I/O from disk I/O and uses the task pool as a buffer between them.

(4) A dynamic thread pool management algorithm is designed, which effectively reduces the CPU load and improves network throughput and overall system performance.

(5) The shortcomings of the traditional methods are addressed as follows:
- the system framework is built in the semi-synchronous/semi-asynchronous way;
- the task pool encapsulates data read and write requests;
- the thread pool processes the tasks in the task pool efficiently and asynchronously.

The system also collects statistics on the idle status of tasks and on its current resource utilization. These statistics drive the dynamic management of the task pool and the thread pool, which reduces the CPU load and improves system throughput.

2 System framework

The overall layout of the system is shown in Figure 1. Each edge server forms a P2P network with several client nodes, which provides efficient service and reduces the load on the server.

When a client's request misses on the edge server, the edge server requests the resource from the origin server. The origin server then obtains the required media resources through the efficient transmission subsystem implemented in this paper and stores them locally. Finally, it publishes the content to multiple edge servers in P2P mode.

In this way, the pressure on the origin server when publishing content is effectively alleviated. In theory, the origin server needs to send only one complete copy of the media, and the other edge servers obtain complete copies through P2P. Similarly, when an edge server serves clients, in theory it needs to transmit only one copy for multiple clients to receive the complete service.

The origin server and the media resource server are usually in the same subnet, where the network is faster than disk I/O. Disk I/O is therefore the bottleneck of the system. To alleviate this mismatch, the transmission subsystem uses the semi-synchronous/semi-asynchronous method to separate network I/O from disk I/O, with the task pool acting as a buffer.

The upper-layer thread handles asynchronous epoll events and the protocol interaction. The framework encapsulates the received data into fixed-size tasks and puts them into the task pool. The lower-layer thread pool takes tasks from the task pool and performs the actual disk read and write operations. When an operation is complete, the thread returns to the thread pool and the task returns to the task pool, where both wait to be scheduled again.
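The division of labor described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper's implementation:
- the upper layer is a plain loop over an in-memory byte string rather than an epoll event loop;
- the "disk" is a `bytearray`;
- the names `TaskPool`, `transfer` and `CHUNK_SIZE` (with its tiny value) are hypothetical.

The sketch shows fixed-size tasks drawn from a preallocated pool, handed to worker threads through a queue, and returned to the pool after the blocking write.

```python
import queue
import threading

CHUNK_SIZE = 4  # hypothetical fixed task size in bytes (tiny, for illustration)


class TaskPool:
    """Hypothetical task pool: preallocated fixed-size buffers that are reused
    instead of being allocated and freed for every piece of received data."""

    def __init__(self, capacity: int, chunk_size: int) -> None:
        self._free: queue.Queue = queue.Queue()
        for _ in range(capacity):
            self._free.put(bytearray(chunk_size))

    def acquire(self) -> bytearray:
        return self._free.get()  # blocks while every buffer is in use

    def release(self, buf: bytearray) -> None:
        self._free.put(buf)  # the task goes back to the pool for reuse


def transfer(stream: bytes, n_workers: int = 3, capacity: int = 8) -> bytes:
    """Hypothetical transfer: split `stream` into tasks and write them to a fake disk."""
    pool = TaskPool(capacity, CHUNK_SIZE)
    ready: queue.Queue = queue.Queue()  # hands tasks from the upper to the lower layer
    disk = bytearray(len(stream))  # stands in for the file being written

    def worker() -> None:
        # Lower (synchronous) layer: take a task and perform the blocking write.
        while True:
            task = ready.get()
            if task is None:  # shutdown sentinel
                return
            offset, length, buf = task
            disk[offset:offset + length] = buf[:length]
            pool.release(buf)

    workers = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in workers:
        t.start()

    # Upper (asynchronous) layer: package received bytes into fixed-size tasks.
    for offset in range(0, len(stream), CHUNK_SIZE):
        piece = stream[offset:offset + CHUNK_SIZE]
        buf = pool.acquire()
        buf[:len(piece)] = piece
        ready.put((offset, len(piece), buf))

    for _ in workers:
        ready.put(None)
    for t in workers:
        t.join()
    return bytes(disk)
```

For example, `transfer(b"surveillance video")` returns the same bytes. They are reassembled from 4-byte tasks that three worker threads write in whatever order they happen to run. Because buffers are recycled, the number of allocations is bounded by `capacity` rather than by the amount of data received.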

3 Algorithm implementation

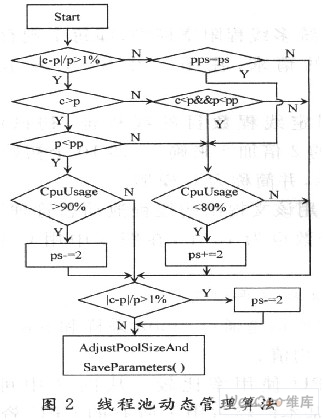

To manage the thread pool dynamically and effectively, various performance parameters must be collected, and the thread pool is adjusted after a comprehensive analysis of them. The algorithm relies on the two most critical parameters: the average waiting time of tasks and the CPU usage. The average waiting time indicates the direction in which the thread pool may need to be adjusted. The CPU usage determines whether that increase or decrease is actually carried out.
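One way to collect the average waiting time is sketched below. The sketch makes two assumptions that the paper does not state:
- a task's waiting time runs from the moment it is put into the task pool to the moment a worker picks it up;
- averages are taken over fixed sampling periods.

`WaitTimeStats` and its methods are hypothetical names. The injectable clock exists only to make the class testable.

```python
import threading
import time
from typing import Callable


class WaitTimeStats:
    """Hypothetical collector of the average task waiting time per sampling period."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._lock = threading.Lock()  # workers record concurrently
        self._total = 0.0
        self._count = 0

    def stamp(self) -> float:
        """Call when a task enters the task pool; keep the value with the task."""
        return self._clock()

    def record(self, enqueued_at: float) -> None:
        """Call when a worker thread takes the task out of the pool."""
        waited = self._clock() - enqueued_at
        with self._lock:
            self._total += waited
            self._count += 1

    def take_average(self) -> float:
        """Return the average wait of the finished period and start a new period."""
        with self._lock:
            avg = self._total / self._count if self._count else 0.0
            self._total, self._count = 0.0, 0
        return avg
```

Each call to `take_average` at the end of a sampling period would yield one value in the series of average waiting times that the adjustment rules compare.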

Figure 2 uses the following notation:
- c (current) is the current average waiting time of the thread pool, referred to below as currTime;
- p (previous) is the previous average waiting time, preTime;
- pp is the average waiting time before that, prepreTime;
- ps (pool size) is the thread pool size;
- pps is the previous thread pool size.

The algorithm does not compare absolute values of the waiting time; instead, it compares currTime with preTime. If they differ by more than 1%, the thread pool may need to be adjusted, and the direction of adjustment depends on how currTime and preTime compare.

If currTime is greater than preTime, preTime is further compared with prepreTime:
- if preTime is less than prepreTime and the CPU usage is above 90%, the thread pool shrinks by a step of 2;
- if preTime is greater than prepreTime and the CPU usage is below 80%, the thread pool grows by a step of 2.

If currTime is less than preTime and preTime is less than prepreTime, the thread pool grows.

In short, the algorithm compares currTime, preTime and prepreTime to determine whether the thread pool needs to be adjusted.

When the thread pool should shrink, the algorithm checks the CPU usage further. The reduction is performed only when the CPU usage exceeds a threshold, because keeping the CPU lightly loaded wastes resources. Likewise, when the thread pool should grow, the increase is performed only when the CPU usage is below a threshold, because the CPU load must not become too high.
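The adjustment rules can be condensed into a decision function. This is a sketch under the following assumptions:
- the 1% test is taken relative to preTime;
- the pool stays between the hypothetical bounds `min_size` and `max_size`;
- the below-80% CPU gate also applies when currTime < preTime < prepreTime, following the general rule that the pool grows only when CPU usage is below a threshold.

The pool sizes ps and pps from Figure 2 are left out because the text does not say how they enter the decision. `Action`, `decide` and `new_pool_size` are hypothetical names.

```python
from enum import Enum


class Action(Enum):
    KEEP = "keep"
    GROW = "grow"
    SHRINK = "shrink"


STEP = 2             # step size from the text (chosen empirically)
CHANGE_RATIO = 0.01  # "difference greater than 1%", assumed relative to preTime
CPU_HIGH = 90.0      # shrink only when CPU usage (%) is above this
CPU_LOW = 80.0       # grow only when CPU usage (%) is below this


def decide(curr_time: float, pre_time: float, prepre_time: float, cpu: float) -> Action:
    """Map the last three average waiting times and the CPU usage to an action."""
    # Changes of 1% or less are treated as noise: leave the pool alone.
    if abs(curr_time - pre_time) <= CHANGE_RATIO * pre_time:
        return Action.KEEP
    if curr_time > pre_time:
        if pre_time < prepre_time and cpu > CPU_HIGH:
            return Action.SHRINK
        if pre_time > prepre_time and cpu < CPU_LOW:
            return Action.GROW
    elif pre_time < prepre_time and cpu < CPU_LOW:
        # curr < pre < prepre: the text says grow; the CPU gate here is an
        # assumption carried over from the general "grow only below a threshold" rule.
        return Action.GROW
    return Action.KEEP


def new_pool_size(size: int, action: Action, min_size: int = 1, max_size: int = 64) -> int:
    """Apply an action with the fixed step; min_size/max_size are hypothetical bounds."""
    if action is Action.GROW:
        return min(size + STEP, max_size)
    if action is Action.SHRINK:
        return max(size - STEP, min_size)
    return size
```

A monitoring thread would call `decide` once per sampling period. On GROW or SHRINK, it would start or stop worker threads to reach the size returned by `new_pool_size`.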

4 Experimental analysis

Because the media resource server and the origin server are usually in the same subnet, the experiments are run on a LAN. The server configuration is: two Intel dual-core Xeon 3 GHz processors, 2 048 KB cache, 4 GB memory, and a 1 000 Mb/s network card.

4.1 Experimental data for the three models

The experiment simulates a large number of data requests by downloading data from the load generator through the transmission subsystem. Experimental data are collected for the following three models:

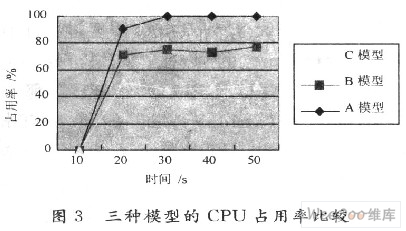

(1) The traditional multi-threaded blocking model, in which each request is handled by its own blocking thread. It is labeled A in Figure 3 and referred to as the A model.

(2) A thread pool with a fixed number of threads. The initial number of threads is set to twice the number of CPUs plus 2, i.e. 10 threads. It is labeled B in Figure 3 and referred to as the B model.

(3) The dynamic thread pool management algorithm proposed in this paper, also starting with 10 threads. It is labeled C in Figure 3 and referred to as the C model.
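The initial thread count of 10 used by the B and C models can be checked against the server configuration. This assumes that "the number of CPUs" counts the four cores of the two dual-core Xeon processors:

$$
n_0 = 2N_{\mathrm{CPU}} + 2 = 2 \times 4 + 2 = 10
$$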

4.2 Analysis of the averaged data

The data below are averages obtained by sampling with nmon and analyzing the samples with nmon analyser.

(1) CPU usage. As Figure 3 shows, the A model occupies almost all CPU resources. Because each thread serves one request, a large number of requests spawns a large number of threads. In the B model, the number of threads is fixed and the threads are created in advance, so the task queue acts as an effective buffer when the request volume is very large. The C model performs best for two reasons: the number of threads is continuously adjusted toward the optimal value, and the task pool effectively reduces frequent memory allocation and release in the system.

(2) Free memory. As Figure 4 shows, the A and B models use very similar amounts of memory for the same total number of requests. In the C model, however, the task pool and thread pool are scaled dynamically. This improves the processing capacity of the system and naturally uses more memory.

(3) Network I/O traffic. Figure 5 shows the network I/O of the three models.

The A model uses blocking I/O. When a socket has no data to read, its thread blocks while waiting for data, and other sockets whose data has already arrived may go unprocessed. The network throughput of the A model is therefore relatively low.

The B model uses non-blocking I/O with a thread pool. When a socket would block, the thread quickly switches to other sockets whose data is ready. This speeds up data reception and therefore increases the network transmission rate.

In the C model, the load on components such as memory and CPU is reduced and performance improves. The dynamic task pool also gives the system better buffering capacity than the B model, so it is to be expected that the C model achieves higher network throughput than the B model.

The system uses a 1 000 Mb/s network card, and the measured throughput basically reaches the card's limit.
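Python's `selectors` module, whose `DefaultSelector` uses epoll on Linux, can illustrate the readiness-based reading that lets a B- or C-model thread skip idle sockets. This is a sketch, not the paper's code: `read_ready` and `demo` are hypothetical helpers, and `socket.socketpair()` stands in for real network connections.

```python
import selectors
import socket
from typing import Dict


def read_ready(conns: Dict[str, socket.socket], timeout: float = 0.1) -> Dict[str, bytes]:
    """Read only from sockets that already have data; never block on idle ones."""
    sel = selectors.DefaultSelector()  # epoll on Linux, another mechanism elsewhere
    for name, sock in conns.items():
        sock.setblocking(False)
        sel.register(sock, selectors.EVENT_READ, data=name)
    received: Dict[str, bytes] = {}
    try:
        for key, _events in sel.select(timeout=timeout):
            received[key.data] = key.fileobj.recv(4096)
    finally:
        sel.close()  # unregisters everything; the sockets themselves stay open
    return received


def demo() -> Dict[str, bytes]:
    """Three connections, of which only 'a' and 'c' have data waiting."""
    pairs = {name: socket.socketpair() for name in ("a", "b", "c")}
    try:
        pairs["a"][1].sendall(b"video")
        pairs["c"][1].sendall(b"audio")
        return read_ready({name: pair[0] for name, pair in pairs.items()})
    finally:
        for reader, writer in pairs.values():
            reader.close()
            writer.close()
```

In `demo`, socket "b" has no pending data, so `select` simply does not report it and no thread waits on it. A blocking A-model thread assigned to "b" would sit idle while data for "a" and "c" waited.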

5 Conclusion

The thread pool is resized dynamically based on statistics of the average task waiting time in the pool and on the current CPU usage. With this dynamic thread pool management algorithm, the system adapts well to sudden changes in client requests on the Internet. When a large number of requests arrive suddenly, the algorithm adds an appropriate number of threads to handle them. When requests decrease, the number of threads is reduced, relieving pressure on the system. Experimental analysis and comparison show that the algorithm effectively reduces the CPU load and improves network throughput and overall system performance.

There is still room to optimize thread pool management, however. For example, the pool size is adjusted in steps of 2, a value chosen from experience without a solid theoretical basis. In addition, more statistics could be fed into the algorithm's decisions to make it more accurate.

This paper combines P2P and CDN in a multimedia surveillance transmission system and designs the system framework in the semi-synchronous/semi-asynchronous mode. It introduces task pool and thread pool techniques to address the bottleneck of the transmission subsystem between the media resource server and the origin server. For this subsystem, it also designs an effective dynamic thread pool management algorithm.
